Responsible AI Decision Making

1. Introduction

Can artificial intelligence (AI) make reliable and explainable decisions for businesses? Businesses and government agencies rely on making accurate decisions about customer interactions, product or service eligibility, workforce scheduling, and supply chain planning. How well does modern AI support this everyday decision making at the heart of every organization’s operations?

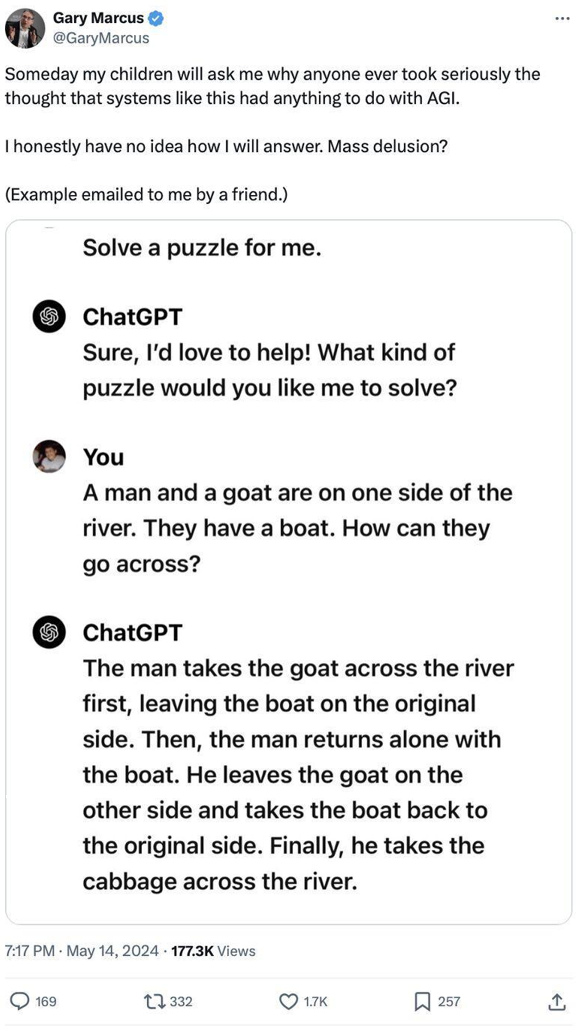

Modern Generative AI (GenAI) systems, such as Large Language Models (LLMs), appear to provide insightful answers to decision questions but operate by leveraging statistical patterns rather than by understanding meaning and applying formal reasoning (Fig. 1). This phenomenon, sometimes called the “Stochastic Parrot” effect, underscores the limitations of trusting such models in high-stakes scenarios.

Industries ranging from healthcare to finance increasingly rely on AI for decision-making, highlighting the urgency of recognizing GenAI’s potential as well as its limits. Techniques like Chain-of-Thought prompting (CoT) and Retrieval-Augmented Generation (RAG) improve AI outputs but do not address the fundamental absence of genuine reasoning. Hybrid approaches combining GenAI with explicit decision frameworks—such as rules engines, optimization tools, and simulations—offer a path toward more trustworthy outcomes.

Figure 1. GPT-4 imitating a common reasoning pattern without understanding (thanks to Gary Marcus)

2. Generative AI and Its Limitations in Autonomous Decision-Making

2.1 The “Stochastic Parrot” Dilemma

Generative AI language models work by predicting the next word in a sequence, using probabilities learned from massive training corpora. This probabilistic approach yields human-like fluency and broad coverage of many domains, but it is an inherent weakness for autonomous decision-making. Although LLMs may appear to reason through complex tasks, they actually perform advanced pattern matching rather than applying a grounded understanding of the world. They excel at generating coherent responses by identifying linguistic and conceptual correlations in text, yet they cannot verify factual correctness, apply formal rules, or search a constrained solution space for a provably correct result. This gap becomes especially concerning when dealing with operational or strategic decisions that demand deep domain knowledge, ethical considerations, or strict regulatory compliance.
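To make the next-word mechanism concrete, here is a minimal sketch of sampling from a probability table. The table `next_token_probs` and its numbers are invented for illustration; a real model computes such probabilities over a vocabulary of many thousands of tokens.

```python
import random

# Hypothetical probabilities a model might assign to the word following
# the prompt "The claim is". The values are invented for illustration.
next_token_probs = {
    "approved": 0.55,
    "denied": 0.30,
    "pending": 0.15,
}

def sample_next_token(probs, rng):
    """Draw one token at random, weighted by its probability."""
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]

rng = random.Random(0)  # fixed seed so the run is repeatable
samples = [sample_next_token(next_token_probs, rng) for _ in range(1000)]
counts = {t: samples.count(t) for t in next_token_probs}
print(counts)
```

Nothing in this loop consults a policy, a contract, or a rule: "approved" is produced most often simply because it is the most likely continuation.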

2.2 Hallucinations and Misleading Outputs

LLMs cannot verify the truth of their own statements and sometimes invent non-existent facts, a phenomenon commonly referred to as “hallucination” but perhaps better described as “confabulation”. Such output can be dangerously misleading when applied in regulated sectors like finance, government services, or healthcare, where even a small error can have significant consequences. The polished style of GenAI content makes it difficult for non-expert readers to discern inaccuracies, especially if they lack contextual knowledge. Because LLMs are designed to maximize the likelihood of producing coherent and engaging text, they can confidently fabricate details in the absence of corroborating data. Users may inadvertently rely on these misleading statements, increasing the risk of errors in critical decisions.

2.3 Biases in Training Data

Generative AI, like all machine learning techniques, mirrors and amplifies the biases embedded in its training set. Even before the advent of LLMs, companies such as Amazon encountered this issue when an ML-based hiring model perpetuated historical gender discrimination. Efforts to scrub problematic data or adjust model weights can mitigate biases to some degree, but many correlations remain deeply baked into large training corpora. Bias not only leads to discriminatory outcomes but also heightens legal and reputational risks, particularly in jurisdictions enforcing strict anti-discrimination laws. Human oversight and explicit logical constraints play crucial roles in identifying, evaluating, and correcting these hidden biases before they manifest in real-world decisions.

2.4 The Black Box Problem

GenAI engines – and machine learning models more generally – typically function as opaque “black boxes”, offering little insight into their internal reasoning. This opacity undermines accountability and trust, especially when AI systems shape critical organizational policies. Regulators increasingly demand that AI decisions be explainable and traceable; European Union legislation such as the AI Act calls for documented rationales behind “high-risk” AI outcomes. Traditional machine-learning methods—especially deep neural networks—generally resist straightforward interpretation because their internal weights do not correspond to concepts and measures that humans use for convincing explanations. Although Explainable AI (XAI) techniques and agentic workflows can highlight factors or trace high-level decision steps, they do not convert a black box into a transparent system. This challenge complicates both internal governance and external compliance, forcing businesses to reconcile the promise of GenAI with the legal and ethical need for explainability.

On the other hand, explicit reasoning technologies like business rules or constraints allow systems to provide sensible explanations based on the rules and ontologies used to describe them, which makes them highly accountable. However, these systems do not have the linguistic capabilities of LLMs. Combining the two is a natural candidate, and Section 4 returns to this idea.

2.5 Over-Reliance on AI

An over-reliance on GenAI can sideline important human expertise, ethical reasoning, and context-specific knowledge. While LLMs are adept at summarizing large volumes of text and identifying common patterns, they do not adapt automatically to ongoing regulatory changes, evolving business needs, or cultural nuances. In some cases, critical terms in contracts or policies require explicit modeling and careful version control. If these points are overlooked and delegated entirely to an LLM, mistakes can occur with far-reaching implications. Domain experts, meanwhile, remain essential for providing situational awareness and ensuring that AI outputs align with enterprise goals, legal constraints, and ethical considerations.

2.6 Managing Change

The real world changes every day: laws and regulations are introduced or modified, new products come on the market, and new competitors appear, requiring changes in marketing and customer engagement. AI models based on statistical analysis of the past struggle when the present changes in significant ways. The examples used for training are no longer relevant, and it can take months to gather new training data, far longer than the business has to respond. Indeed, if decisions have been automated using the old models, there may be no examples at all of correct decisions under the updated rules or situation. In these cases, an explicit representation of the rules and procedures of the real world is needed, along with robust governance.
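As a rough illustration of what an explicit, governed representation can look like, the sketch below stores a rule as dated versions and selects the one in force on a given day. The `RuleVersion` class, the `max_payout` field, and all dates and amounts are hypothetical.

```python
from dataclasses import dataclass
from datetime import date

@dataclass(frozen=True)
class RuleVersion:
    effective_from: date
    max_payout: int  # hypothetical policy limit

# Hypothetical rule history: the limit was raised at the start of 2025.
RULE_HISTORY = [
    RuleVersion(date(2023, 1, 1), 5000),
    RuleVersion(date(2025, 1, 1), 7500),
]

def rule_in_force(history, on_date):
    """Return the most recent version whose effective date has passed."""
    applicable = [r for r in history if r.effective_from <= on_date]
    if not applicable:
        raise ValueError(f"no rule in force on {on_date}")
    return max(applicable, key=lambda r: r.effective_from)

print(rule_in_force(RULE_HISTORY, date(2024, 6, 1)).max_payout)  # 5000
print(rule_in_force(RULE_HISTORY, date(2025, 3, 1)).max_payout)  # 7500
```

Changing the policy here means appending one entry to the history, which takes effect on its stated date; no retraining data is needed.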

3. Enhancing AI Reasoning with Advanced Techniques

These limitations of GenAI models have been observed since the models were introduced, and many of them apply to all statistical or machine learning approaches to AI. In the LLM world, several approaches have been suggested to increase the accuracy, reasoning capability, and timeliness of GenAI model inference. Here is a quick rundown of some of these approaches.

3.1 Chain-of-Thought (CoT) and Tree-of-Thought (ToT)

Chain-of-Thought prompting asks a model to outline intermediate steps before arriving at a conclusion, potentially reducing errors by making the reasoning process more explicit. Tree-of-Thought expands on this idea by exploring multiple candidate chains in parallel, though the added branching paths raise computational overhead. While both CoT and ToT can help clarify the model’s responses, they do not guarantee logical or factual correctness. Because LLMs remain reliant on their original training data and core architecture, they may still produce faulty reasoning if the question involves novel constraints or complex logic absent from familiar patterns. Although these techniques can improve reasoning, they come at a cost. Repeatedly calling an LLM, as CoT and ToT workflows do, increases computational requirements, resulting in higher energy consumption and API usage fees. It can also magnify the impact of hallucinations: because the results of earlier inferences are fed back into the LLM, any early hallucination is carried forward into the rest of the “chain of thought”.
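A back-of-the-envelope calculation shows why early errors matter in long chains. It assumes, as a deliberate simplification, that every step is correct independently with the same probability; real reasoning steps are neither independent nor equally reliable, and the 95% figure is invented.

```python
def chain_success_probability(step_accuracy, num_steps):
    """Probability that every step in a chain is correct, assuming
    independent steps with identical accuracy (a simplification)."""
    return step_accuracy ** num_steps

for n in (1, 5, 10, 20):
    print(n, round(chain_success_probability(0.95, n), 3))
# 1 0.95
# 5 0.774
# 10 0.599
# 20 0.358
```

Under these assumptions, a step that is right 95% of the time yields a fully correct 20-step chain only about a third of the time.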

3.2 Retrieval-Augmented Generation (RAG)

Retrieval-Augmented Generation addresses the problem of outdated or incomplete model knowledge by incorporating external data sources at inference time. Instead of relying solely on pre-trained parameters, the application that calls the LLM first consults a database, search engine, or knowledge base to augment its context before providing a more complex prompt to the LLM. This can mitigate certain hallucinations by supplying updated or domain-specific facts in the prompt. However, if the retrieval system does not locate all relevant records or fails to distinguish critical content from extraneous text, the AI may still produce incomplete or incorrect answers. Implementing RAG effectively thus requires strong metadata, well-curated repositories, and robust indexing or search algorithms.
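The retrieve-then-prompt flow can be sketched in a few lines. This version ranks documents by simple word overlap, a stand-in for the embedding or search index a production system would more likely use; the `DOCUMENTS` list and the prompt wording are invented for illustration.

```python
import re

# Hypothetical knowledge base entries.
DOCUMENTS = [
    "Water damage from burst pipes is covered up to 5000 EUR.",
    "Flood damage from rivers is excluded from the standard policy.",
    "Claims must be filed within 30 days of the incident.",
]

def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query, documents, top_k=2):
    """Rank documents by the number of words shared with the query."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc)), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:top_k] if score > 0]

def build_prompt(query, documents):
    """Prepend the retrieved context to the user's question."""
    context = retrieve(query, documents)
    context_block = "\n".join(f"- {doc}" for doc in context)
    return (
        "Answer using only this context:\n"
        f"{context_block}\n\nQuestion: {query}"
    )

prompt = build_prompt("Is burst pipes water damage covered?", DOCUMENTS)
print(prompt)
```

The filing-deadline document shares no words with the question, so it never reaches the prompt even though it may decide the claim. This is the retrieval gap described above.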

3.3 Function Calling and Agentic Workflows

Function calling and agentic workflows integrate LLMs with external APIs or directed graphs of specialized tasks. These capabilities allow AI systems to consult up-to-date services, perform specific computations, or verify certain facts. For instance, an LLM can fetch current weather information or real-time stock prices rather than relying on stale training data. While agentic workflows automate multi-step processes by delegating tasks between different “nodes” (e.g., rule engines, LLM calls, or domain-specific applications), proper governance is crucial. If constraints are not well-defined or if the AI is given too much autonomy, unexpected outcomes may arise, leading to safety or liability concerns.

This capability is exciting. The ability to call external services opens a promising route to hybrid systems that place true reasoning engines inside the flow of an LLM-based application. Such systems combine the linguistic prowess of LLMs with more accurate, explainable, and manageable decision-making technology such as business rules and optimization, as we will see below.
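The governance point about function calling can be illustrated with a small dispatcher that executes only allowlisted functions. The tool name `get_policy_limit`, the JSON request shape, and the policy data are all hypothetical; actual LLM APIs define their own tool-call formats.

```python
import json

def get_policy_limit(policy_id):
    """Hypothetical lookup standing in for a call to a core system."""
    limits = {"P-100": 5000, "P-200": 12000}
    return limits[policy_id]

# Only functions listed here may be invoked on the model's behalf.
ALLOWED_TOOLS = {"get_policy_limit": get_policy_limit}

def dispatch(tool_call_json):
    """Validate and execute a tool call requested by the model."""
    call = json.loads(tool_call_json)
    name = call["name"]
    if name not in ALLOWED_TOOLS:
        raise PermissionError(f"tool not allowed: {name}")
    return ALLOWED_TOOLS[name](**call["arguments"])

# Simulated model output asking for a tool to be run.
model_request = (
    '{"name": "get_policy_limit", "arguments": {"policy_id": "P-100"}}'
)
result = dispatch(model_request)
print(result)  # 5000
```

A request for any function outside `ALLOWED_TOOLS` is rejected before anything runs, which keeps the model's autonomy inside limits that people have defined.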

4. Hybrid AI Approaches for Reliable Decision-Making

4.1 Integrating Generative AI with Decision Models

Because GenAI alone struggles with factual reliability, explainability, and context-sensitivity, many organizations embrace hybrid strategies that pair LLM capabilities with structured logic engines, optimization tools, or simulation systems. In such an architecture, GenAI excels at parsing unstructured inputs—for example, summarizing legal documents or extracting relevant policy references. Once data is structured, explicit models such as Business Rules Management Systems (BRMS) and Mathematical Optimization engines apply verified constraints or performance criteria. This hybrid approach prevents the “stochastic” outputs of an LLM from overriding legal or ethical imperatives and allows domain experts to tailor rules as needed for regulatory changes, corporate priorities, or new market conditions.

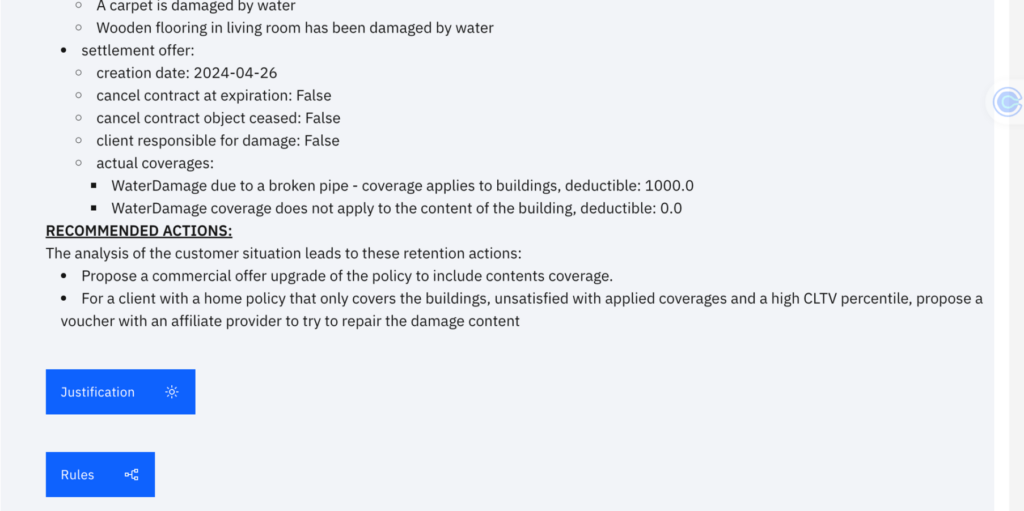

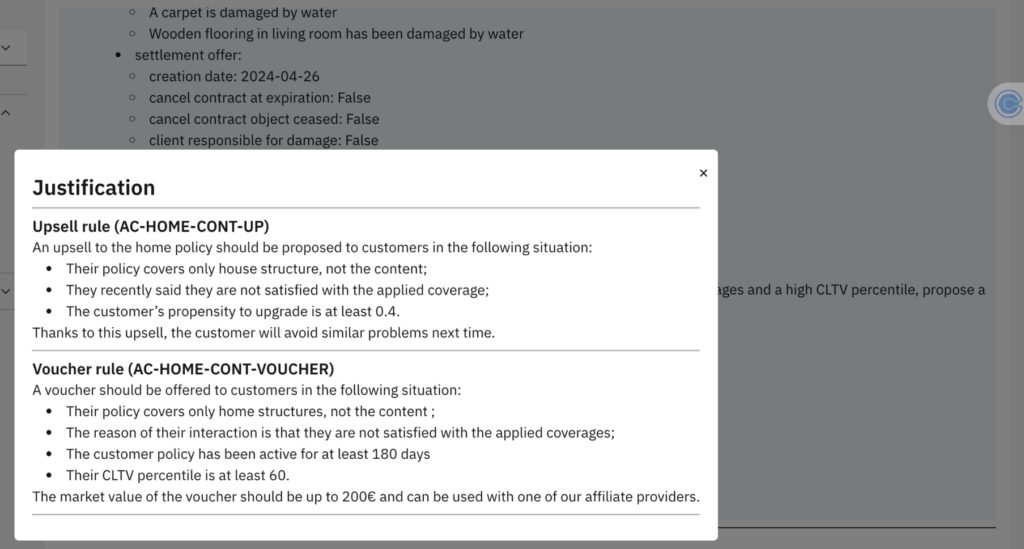

4.2 Illustrative Example: Insurance Claims

An insurance company might use an LLM to parse water-damage incident reports, extracting details like policy numbers, timeframes, and damage descriptions. The LLM then passes these structured findings to a rules engine that determines eligibility and recommended payouts based on contract terms, claimant histories, and local regulations. If the LLM tries to introduce extraneous or speculative information—perhaps imagining coverage that does not exist—the rules engine will override it, ensuring the final decision aligns with verified guidelines. By combining human oversight and explicit business rules with GenAI’s data extraction abilities, insurers enjoy faster claims processing without sacrificing consistency, explainability, or compliance.
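The rules-engine half of this example might look like the sketch below. It assumes the LLM has already produced the structured `Claim`; the field names, covered damage types, payout cap, and filing deadline are invented, and a production system would typically use a BRMS rather than hand-written conditionals.

```python
from dataclasses import dataclass

@dataclass
class Claim:
    policy_active: bool
    damage_type: str
    amount_claimed: int
    days_since_incident: int

# Hypothetical contract terms.
COVERED_DAMAGE = {"burst_pipe", "appliance_leak"}
MAX_PAYOUT = 5000
FILING_DEADLINE_DAYS = 30

def decide(claim):
    """Return (eligible, payout, reasons) using explicit rules only."""
    reasons = []
    if not claim.policy_active:
        reasons.append("policy is not active")
    if claim.damage_type not in COVERED_DAMAGE:
        reasons.append(f"damage type '{claim.damage_type}' is not covered")
    if claim.days_since_incident > FILING_DEADLINE_DAYS:
        reasons.append("claim filed after the deadline")
    if reasons:
        return False, 0, reasons
    payout = min(claim.amount_claimed, MAX_PAYOUT)
    return True, payout, [f"all rules passed; payout capped at {MAX_PAYOUT}"]

# Structured data as the LLM extraction step might deliver it.
extracted = Claim(True, "burst_pipe", 8000, 10)
print(decide(extracted))
# (True, 5000, ['all rules passed; payout capped at 5000'])
```

Every outcome comes with the list of rules that produced it, so the decision can be explained and audited however the claim text was parsed.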

When dealing with customer interactions regarding insurance claims, a hybrid approach can also help (Fig. 2-3):

- Customer emails or messages can be interpreted by an LLM to determine intention and extract key information.

- Tool-calling and RAG can then enhance this information with data from a core insurance system and CRM to provide complete context. This data can also include customer segmentation and scoring information generated by machine learning models.

- This contextual data can then be given to a rule-based system to ensure compliance with corporate policies regarding how to handle customers, including rules about differential handling for various customer segments and ensuring both responsiveness and profitability.
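The three steps in this list can be wired together as a small pipeline. In this sketch the LLM step is replaced by keyword matching so that the example runs on its own; the `CRM` records, segment names, and handling rules are hypothetical.

```python
# Hypothetical CRM records keyed by customer id.
CRM = {
    "C-1": {"segment": "premium", "open_claims": 1},
    "C-2": {"segment": "standard", "open_claims": 4},
}

def interpret_message(text):
    """Stand-in for the LLM step: keyword matching, not a model call."""
    intent = "claim_status" if "status" in text.lower() else "other"
    return {"intent": intent}

def enrich(customer_id, interpretation):
    """Add context from the (hypothetical) CRM to the interpretation."""
    return {**interpretation, **CRM[customer_id]}

def route(context):
    """Explicit handling policy applied to the enriched context."""
    if context["segment"] == "premium":
        return "respond within 4 hours"
    if context["open_claims"] > 3:
        return "escalate to a human adjuster"
    return "respond within 2 business days"

context = enrich("C-2", interpret_message("What is the status of my claim?"))
print(route(context))  # escalate to a human adjuster
```

The language step decides only what the customer is asking; how the customer is handled is fixed by rules that the business can read and change.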

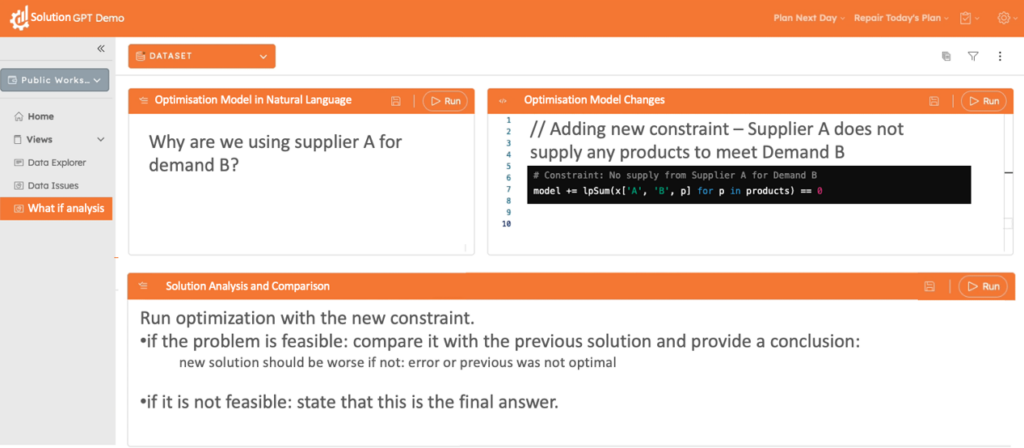

4.3 Illustrative Example: “What-If” Scenario Analysis in Supply Chain Modeling

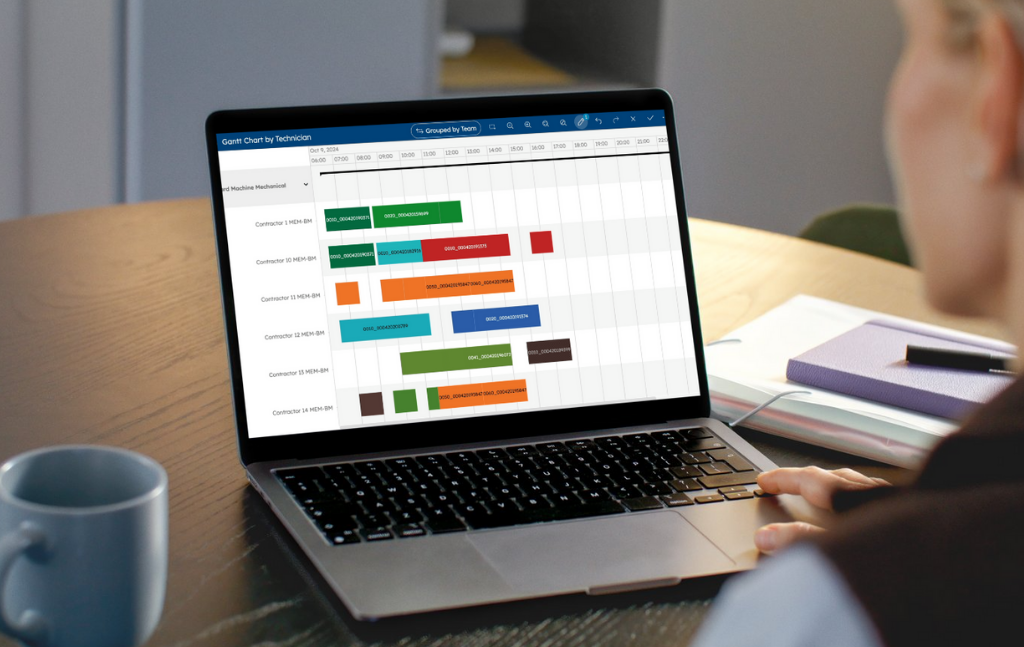

Fig. 4 illustrates how an LLM-driven interface allows non-technical users to explore “What-If” scenarios in an optimization model. In this example, the user poses a natural language question—“Why are we using supplier A for demand B?”—prompting the system to modify constraints in the underlying solver. Rather than relying on guesswork, the LLM translates the user’s request into symbolic logic, adds a constraint to block supplier A from serving demand B, and reruns the solver to compute a feasible or optimal solution.

If the problem is feasible, the system compares its new solution to the previous one, highlights any trade-offs, and presents concise explanations in human-readable language. Should the problem prove infeasible, the system identifies this outcome as final—ensuring clarity about why certain demands cannot be met under the new constraints. By seamlessly uniting LLM-based parsing with formal optimization techniques, this workflow empowers business users to test assumptions, explore alternatives, and make informed decisions without extensive technical expertise.
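The what-if loop can be imitated on a toy problem. The sketch below uses brute-force enumeration in place of a real optimization solver and assumes the LLM has already turned the user's question into the blocked pair `("A", "B")`; the suppliers, demands, capacities, and costs are invented.

```python
from itertools import product

# Hypothetical toy data: two suppliers, two demands.
SUPPLIERS = ["A", "Z"]
DEMANDS = ["B", "C"]
CAPACITY = {"A": 2, "Z": 1}  # number of demands each supplier can serve
COST = {("A", "B"): 4, ("A", "C"): 6, ("Z", "B"): 7, ("Z", "C"): 5}

def solve(blocked=frozenset()):
    """Return (cost, assignment) of the cheapest feasible plan, or None."""
    best = None
    for choice in product(SUPPLIERS, repeat=len(DEMANDS)):
        pairs = list(zip(choice, DEMANDS))
        if any(pair in blocked for pair in pairs):
            continue  # violates a what-if constraint
        if any(choice.count(s) > CAPACITY[s] for s in SUPPLIERS):
            continue  # violates supplier capacity
        cost = sum(COST[pair] for pair in pairs)
        if best is None or cost < best[0]:
            best = (cost, dict(zip(DEMANDS, choice)))
    return best

baseline = solve()
what_if = solve(blocked={("A", "B")})
if what_if is None:
    print("Infeasible: demand cannot be met under the new constraint.")
else:
    print("Baseline:", baseline)
    print("Without A serving B:", what_if)
    print("Cost increase:", what_if[0] - baseline[0])
```

In this toy data, blocking supplier A from demand B raises the total cost from 9 to 13, which answers the user's "why" in concrete terms. Blocking both suppliers for demand B makes `solve` return `None`, the infeasible case.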

5. Benefits of Generative AI in Decision-Making

GenAI delivers considerable advantages when used in tandem with structured decision tools. Models can analyze unstructured textual or image data—from emails to contracts to user-generated content—and summarize essential insights and extract structured information. This speeds up processes such as market research, competitor analysis, and quality control, freeing employees from laborious reading tasks. It also democratizes data-driven decision-making by providing straightforward, conversational interfaces that non-technical staff can engage with. In software development, LLMs can suggest code snippets, documentation updates, or test cases, expediting prototyping and reducing repetitive workloads.

When integrated effectively, GenAI amplifies human expertise by extracting structured information from unstructured data, surfacing relevant facts and patterns, and allowing professionals to focus on complex tasks that require deep judgment, creativity, or empathy. A system might flag potential legal or contractual conflicts that a busy professional could otherwise miss, but final decisions remain subject to human review and explicit logic checks. This synergy enables organizations to harness the strength of AI-based pattern matching while upholding safety, fairness, and compliance principles.

6. Conclusion

Generative AI has demonstrated significant potential, particularly for processing and generating unstructured data such as text and images, and it can be the missing ingredient that transforms decision-making processes across many fields. Throughout this article, we have explored how Generative AI supports decision-making by extracting structured information from unstructured data, accelerating processes, and democratizing data access for non-technical users. These benefits highlight Generative AI’s ability to augment human capabilities, making organizations more efficient and effective in their decision-making endeavors.

However, Generative AI alone has significant limitations. Its reliance on statistical patterns without genuine understanding means it cannot make truly compliant decisions, especially in strategic business contexts. To overcome these limitations, hybrid AI approaches that integrate GenAI with symbolic decision systems—such as Business Rules, Optimization, and Simulation tools—offer a robust framework for reliable decision-making.

The collaboration between Generative AI, those systems, and human oversight underscores the importance of human-AI partnership. Humans provide necessary oversight, contextual understanding, and ethical considerations, ensuring that AI-assisted decisions are accurate, fair, and contextually appropriate. This symbiotic relationship is essential for navigating the complexities of modern decision-making, effectively leveraging both human insight and AI capabilities – and creating truly Accountable AI-based Systems.

Further Reading

Here are some articles and papers that we have found particularly interesting to help better understand the challenges and potential of using Generative AI and symbolic techniques to make more accountable and powerful AI-based systems.

- Syed Ali. ‘GenAI: The Future of Decision Making.’ LinkedIn, 2024. https://www.linkedin.com/pulse/genai-future-decision-making-syed-ali-gb4pc

- Yuhang Wang, et al. ‘Machine Learning and Information Theory Concepts towards an AI Mathematician.’ arXiv preprint arXiv:2403.04571v1, 2024. https://arxiv.org/html/2403.04571v1

- Emily M. Bender, et al. ‘On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?’ Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency, 2021. https://dl.acm.org/doi/pdf/10.1145/3442188.3445922

- King & Wood Mallesons. ‘Risks of Gen AI: The Black Box Problem.’ King & Wood Mallesons, 2024. https://www.kwm.com/au/en/insights/latest-thinking/risks-of-gen-ai-the-black-box-problem.html

- University of Michigan-Dearborn. ‘AI’s Mysterious Black Box Problem Explained.’ University of Michigan-Dearborn News, 2024. https://umdearborn.edu/news/ais-mysterious-black-box-problem-explained

- John Smith, et al. ‘Explainable AI – XAI.’ arXiv preprint arXiv:2404.09554v1, 2024. https://arxiv.org/html/2404.09554v1

- Forbes Technology Council. ‘Decision Making 2.0, Powered By Generative AI.’ Forbes, February 14, 2024. https://www.forbes.com/councils/forbestechcouncil/2024/02/14/decision-making-20-powered-by-generative-ai/

- DecisionSkills.com. ‘Decision Making and Generative AI: Benefits and Limitations.’ DecisionSkills.com, 2024. https://www.decisionskills.com/generativeai.html

- Harvard Business Review. ‘How AI Can Help Leaders Make Better Decisions Under Pressure.’ Harvard Business Review, October 2023. https://hbr.org/2023/10/how-ai-can-help-leaders-make-better-decisions-under-pressure

- World Economic Forum. ‘Causal AI: The Revolution Uncovering the ‘Why’ of Decision-Making.’ World Economic Forum, April 2024. https://www.weforum.org/agenda/2024/04/causal-ai-decision-making/

- Diwo.ai. ‘What are the Differences Between Generative AI and Decision Intelligence?’ Diwo.ai, 2024. https://diwo.ai/faq/generative-ai-vs-decision-intelligence/

- Pierre Feillet. ‘Approaches in Using Generative AI for Business Automation: The Path to Comprehensive Decision Automation.’ Medium, August 4, 2023. https://medium.com/@pierrefeillet/approaches-in-using-generative-ai-for-business-automation-the-path-to-comprehensive-decision-3dd91c57e38f

- Rob Levin and Kayvaun Rowshankish. ‘The Evolution of the Data-Driven Enterprise.’ McKinsey & Company, July 31, 2023. https://www.mckinsey.com/capabilities/mckinsey-digital/our-insights/tech-forward/the-evolution-of-the-data-driven-enterprise

- Philip Meissner and Yusuke Narita. ‘How Artificial Intelligence Will Transform Decision-Making.’ The Choice by ESCP, December 5, 2023. https://thechoice.escp.eu/tomorrow-choices/how-artificial-intelligence-will-transform-decision-making/

- McKinsey & Company. ‘Artificial Intelligence in Strategy.’ McKinsey & Company, January 11, 2023. https://www.mckinsey.com/capabilities/strategy-and-corporate-finance/our-insights/artificial-intelligence-in-strategy

- Business Reporter. ‘Why Generative AI Is the Secret Sauce to Accelerate Data-Driven Decision-Making.’ Bloomberg, 2024. https://sponsored.bloomberg.com/article/business-reporter/why-generative-ai-is-the-secret-sauce-to-accelerate-data-driven-decision-making

- Runday.ai. ‘Can Generative AI Make Independent Decisions?’ Medium, July 12, 2024. https://medium.com/@mediarunday.ai/can-generative-ai-make-independent-decisions-d20db8412151

- Nelson F. Liu, et al. ‘Lost in the Middle: How Language Models Use Long Contexts.’ arXiv preprint arXiv:2307.03172, 2023. https://arxiv.org/abs/2307.03172

- Francesca Rossi and Nicholas Mattei. ‘Building Ethically Bounded AI.’ arXiv preprint arXiv:1812.03980, 2018. https://arxiv.org/abs/1812.03980

Filippo Focacci

Co-founder & CEO, DecisionBrain

About the Author

Before founding DecisionBrain, Filippo Focacci worked for ILOG and IBM for over 15 years, where he held several leadership positions in Consulting, R&D, Product Management and Product Marketing in the area of Supply Chain and Optimization. He received a Ph.D. in Operations Research (OR) from the University of Ferrara (Italy) and has over 15 years of experience applying OR techniques in industrial applications in several optimization domains. He has published Supply Chain and Optimization articles for several international conferences and journals.

Kajetan Wojtacki

Sr. Research Engineer, DecisionBrain

About the Author

Kajetan Wojtacki is a Senior Research Engineer at DecisionBrain (since 2022), specializing in Python development, AI, and machine learning. He has extensive experience in high-performance computing, numerical modeling, and data-driven analysis, bridging disciplines such as physics, mechanics, biology, and data science. Previously, he served as an Associate Professor at the Polish Academy of Sciences and as a postdoctoral fellow at CNRS, being involved in multidisciplinary projects that applied analytical and numerical modeling as well as deep learning. He has published in leading international journals, presented at global conferences, and collaborated with top scientists. Kajetan holds a BEng & MEng in Applied Physics from Gdansk University of Technology, and a PhD in Mechanics and Civil Engineering from the University of Montpellier.

Harley Davis

Founder & CEO, Athena Decision Systems

About the Author

Harley Davis has been a leader in the AI-based decision space for 35 years. As product leader for IBM Decision technology and IBM watsonx Orchestrate, Harley led the creation of the next generation of symbolic AI. As head of IBM France R&D, Harley forged deep partnerships with leading universities and researchers in AI. As sales leader and professional services leader at ILOG and IBM, Harley helped define and create the market for business rule technology and worked with dozens of leading companies to implement decisioning systems. Harley has a BS and MS from MIT in Artificial Intelligence and Computer Science.