
3 Case Studies on Production Bottleneck Management in the Semiconductor Industry

Industry analysts were recently predicting that the semiconductor chip shortage would not subside until at least the end of 2023. That was before Russia invaded Ukraine. The war has only made the outlook more dire: according to Techcet, the semiconductor industry relies on Russia and Ukraine to supply critical raw materials such as neon and palladium. The growing list of sanctions will undoubtedly exacerbate semiconductor supply issues.

Adjusting Manufacturing Processes and Supply Chains

The industry is now scrambling to evaluate alternative materials and sourcing strategies for semiconductor chips, raw materials and equipment. As we learned during the early months of the pandemic, many large-scale manufacturing and supply chain processes were designed to favor efficiency over resilience. This means that even small process adjustments, like switching material suppliers, changing a plant’s product mix, or adjusting staffing levels, can have outsized impacts on throughput.

To combat these risks, semiconductor industry participants must be more nimble and adept at identifying and managing the bottlenecks in their processes and supply chains.

Managing Bottlenecks

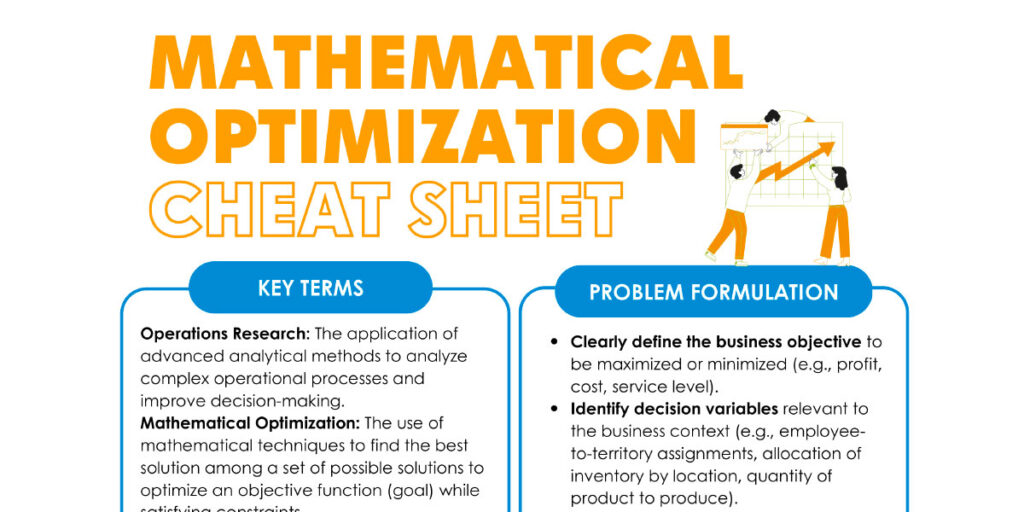

A bottleneck is the part of a process that limits its throughput. Bottlenecks inevitably occur in production, distribution, fulfillment and elsewhere within a supply chain. Practitioners of operations management, or its more academic cousin, operations research, typically identify bottlenecks by analyzing the dynamics of the production system with respect to four characteristics:

- Accumulation analysis: material or parts enter your supply chain or manufacturing process faster than the production process can handle them, so they sit in some sort of queue.

- Wait time analysis: the inverse of accumulation, in which there is a hold-up somewhere in the process, such as waiting for a part to arrive or for a machine to complete its step.

- Throughput analysis: the amount of product produced over a specified time period falls short of its potential but could be improved by tuning the individual steps.

- Full capacity analysis: none of the above applies because every step in the chain or process is at full capacity, yet throughput objectives still aren’t being met.
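To make the four analyses concrete, here is a minimal Python sketch on a toy serial line. It is a hypothetical simplification, not a tool described in this article: the names `StationStats`, `simulate_line` and `diagnose` are illustrative, stations have fixed per-period capacities, raw material arrives at a constant rate, and transfer time between stations is assumed to be zero. A queue left standing in front of a station flags accumulation, capacity left unused while a station waits for input flags wait time, and a line-level check separates a tunable throughput gap from a full-capacity one.

```python
from dataclasses import dataclass


@dataclass
class StationStats:
    name: str
    capacity: int       # units the station can process per period (hypothetical)
    queue: int = 0      # units waiting in front of the station
    processed: int = 0  # units completed so far
    starved: int = 0    # capacity left unused because the queue ran dry


def simulate_line(arrival_rate, capacities, periods):
    """Deterministic serial line: each station passes its output to the next one."""
    stations = [StationStats(name, cap) for name, cap in capacities]
    for _ in range(periods):
        stations[0].queue += arrival_rate  # raw material arrives at a fixed rate
        for i, st in enumerate(stations):
            done = min(st.capacity, st.queue)
            st.queue -= done
            st.processed += done
            st.starved += st.capacity - done
            if i + 1 < len(stations):
                stations[i + 1].queue += done  # zero transfer time assumed
    return stations


def diagnose(stations, periods, target_throughput):
    """Label each station and the whole line using the four analyses."""
    report = {}
    for st in stations:
        if st.queue > 0:
            report[st.name] = "accumulation"  # work is piling up in front of it
        elif st.starved > 0:
            report[st.name] = "wait time"     # it sat idle waiting for input
        else:
            report[st.name] = "fully utilized"
    throughput = stations[-1].processed / periods
    if throughput < target_throughput:
        if all(label == "fully utilized" for label in report.values()):
            report["line"] = "full capacity"  # every step saturated, target still missed
        else:
            report["line"] = "throughput"     # slack exists, so tuning can help
    return throughput, report


stations = simulate_line(10, [("A", 12), ("B", 8), ("C", 11)], periods=5)
throughput, report = diagnose(stations, periods=5, target_throughput=10)
print(throughput, report)
```

In this run station B is the bottleneck: work accumulates in front of it, C waits for parts from B, and the line delivers 8 units per period against a target of 10.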

Bottlenecks are particularly hard to manage in the semiconductor industry for the following reasons:

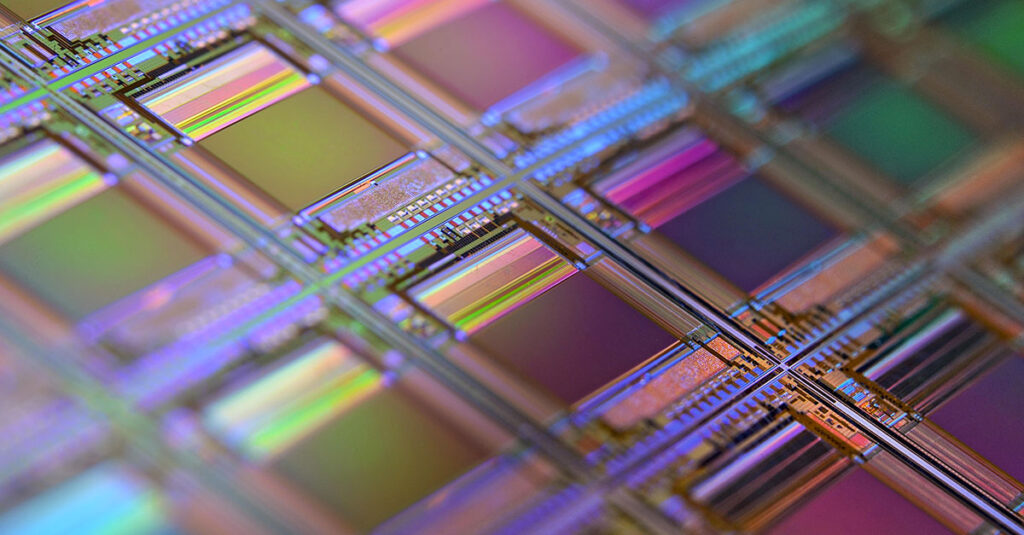

- Long and complex production processes: Intel’s “From Sand to Silicon” paper states that “A microprocessor is the most complex manufactured product on Earth.” Even related products, like semiconductor equipment, circuit boards and other electronic devices, can have very long production processes with many production steps, long lead times, and complex interrelationships between workers, supply and demand.

- Bottlenecks are constantly moving: bottlenecks in the semiconductor industry are highly dependent on the product mix. If the product mix is not well controlled, the bottleneck moves, the theoretical bottleneck is under-utilized, throughput decreases and lead time increases. But it’s very difficult to control product mix in the face of highly variable customer demand, and in a post-pandemic, post-Russia/Ukraine world, the rate of change and volatility of everything seems to be increasing.

- New production lines can take years to stand up: they also require enormous amounts of capital, so debottlenecking programs that rely on adding capacity cannot be carried out quickly.
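Why the bottleneck moves with the product mix can be shown with a simple load calculation: each station’s utilization is the sum over products of units times minutes per unit, divided by available minutes, and the bottleneck is the most utilized station. The routings, station names and minute values below are hypothetical, chosen only so that two mixes with the same total volume load the stations differently.

```python
def station_utilization(mix, minutes_per_unit, available_minutes):
    """Weekly utilization of each station for a given product mix."""
    load = {station: 0.0 for station in available_minutes}
    for product, units in mix.items():
        for station, minutes in minutes_per_unit[product].items():
            load[station] += units * minutes
    return {s: load[s] / available_minutes[s] for s in available_minutes}


def find_bottleneck(mix, minutes_per_unit, available_minutes):
    """The bottleneck is the station with the highest utilization."""
    util = station_utilization(mix, minutes_per_unit, available_minutes)
    return max(util, key=util.get)


# Hypothetical routings: product X is heavy on lithography, product Y on etch.
minutes_per_unit = {
    "X": {"lithography": 4, "etch": 1},
    "Y": {"lithography": 1, "etch": 4},
}
available_minutes = {"lithography": 2400, "etch": 2400}

mix_a = {"X": 400, "Y": 200}  # 600 units in total
mix_b = {"X": 200, "Y": 400}  # same volume, different mix
print(find_bottleneck(mix_a, minutes_per_unit, available_minutes))
print(find_bottleneck(mix_b, minutes_per_unit, available_minutes))
```

Both mixes contain 600 units, yet the first makes lithography the bottleneck (75% utilized) and the second makes etch the bottleneck, which is why an uncontrolled mix leaves the theoretical bottleneck under-utilized.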

Agility is the only effective way to increase throughput and manage costs in today’s dynamic environment. Organizations and their systems must support rapid detection of bottlenecks, the evolution of processes and granular measurement of performance. These capabilities are critical for making the right business decisions today and preparing for the various possibilities that tomorrow will bring.

In this paper, we present three case studies on how specific semiconductor supply chain bottlenecks were handled by three manufacturers of semiconductors or electronics components. All three examples illustrate how optimization-based systems together with analytics tools can support organizations in incrementally improving their processes through the management of bottlenecks. At the end of the paper, we also explain a bit about how optimization-based systems work.

Case Study 1 – IBM Bromont: Planning Adjustments Opened the Supply Bottleneck

IBM’s Bromont facility is an assembly and test plant that packages and tests silicon devices for several external customers. The plant assembles 2,000 different new products every year, each using 20 to 50 production steps. A new production batch enters the assembly line about every three minutes, and new part numbers are assigned every two hours. Around $700 million worth of products are exported annually.

Cycle time is a key competitive advantage for IBM. However, a combination of labor, material and component shortages had led to delivery delays. On the labor side, for instance, the plant was understaffed some weeks and overstaffed others. On the raw materials and components side, it faced rapid changes to the availability, lead times and order limits of these products. IBM wanted to enhance its long-term planning system to better balance weekly labor workload, to optimize materials and parts sourcing across cost, efficiency and resilience, and to reduce cycle times.

IBM worked with DecisionBrain to develop a next-generation optimization-based long-term planning system. The system is integrated with internal asset management and order management systems to take in component supply data, customer order data, personnel and factory constraints, such as machine capacity, team capacity, and processing times. Production process step timelines were also supplied. The optimization problem was modeled as a set of objectives, such as meeting order delivery promises, minimizing costs, and balancing the workload. The long-term planning also considers the key manufacturing constraints, like those mentioned above.

Under the covers, this input data was fed to IBM’s own CPLEX optimization engine, which uses mathematical approaches to quickly narrow down the billions of possible plans. Production and supply constraints were considered simultaneously, ultimately yielding a set of candidate plans that optimize across the competing objectives. The main output of the optimization engine is the combination of the production planning and the material sourcing instructions: how many of which SKUs to order and when. The planner could evaluate the plans side-by-side to understand the KPI-level tradeoffs, make manual adjustments and finally select the plan to deploy.
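The article does not say how the candidate plans are compared before the planner reviews them. One common and simple way to narrow a set of candidates is Pareto filtering: drop any plan that another plan beats or ties on every KPI while beating it on at least one. The sketch assumes three hypothetical KPIs (`cost`, `late_orders`, `workload_spread`), all to be minimized, and made-up plan values.

```python
from typing import NamedTuple


class PlanKPIs(NamedTuple):
    name: str
    cost: float             # total plan cost (lower is better)
    late_orders: int        # orders missing their promise date (lower is better)
    workload_spread: float  # max minus min weekly labor hours (lower is better)


def dominates(a, b):
    """True if plan a is no worse than b on every KPI and strictly better on one."""
    ka, kb = a[1:], b[1:]
    return all(x <= y for x, y in zip(ka, kb)) and any(x < y for x, y in zip(ka, kb))


def pareto_front(plans):
    """Keep only the plans that no other candidate dominates."""
    return [p for p in plans if not any(dominates(q, p) for q in plans)]


candidates = [
    PlanKPIs("cost-first", 100.0, 5, 40.0),
    PlanKPIs("service-first", 130.0, 0, 35.0),
    PlanKPIs("balanced", 115.0, 2, 10.0),
    PlanKPIs("dominated", 140.0, 3, 45.0),
]
for plan in pareto_front(candidates):
    print(plan)
```

The "dominated" plan is removed because "service-first" is better on all three KPIs; the remaining three represent genuine tradeoffs that only the planner can settle.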

One of the benefits of the project was a 20% reduction in the variability of workforce demand, which resulted in lower contract labor costs and improved employee satisfaction.
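The objectives described for IBM, meeting delivery promises while balancing weekly workload, can be folded into one weighted objective. In this hypothetical toy, brute-force enumeration stands in for the CPLEX model (it only works for a handful of orders), the weights `w_late` and `w_balance` are made-up values, and the spread between the busiest and quietest week serves as a simple proxy for workforce-demand variability.

```python
from itertools import product


def plan_cost(assignment, orders, weeks, w_late, w_balance):
    """Weighted objective: lateness penalty plus spread of weekly labor load."""
    load = [0] * weeks
    late_weeks = 0
    for (_, hours, _, due), week in zip(orders, assignment):
        load[week] += hours
        late_weeks += max(0, week - due)
    return w_late * late_weeks + w_balance * (max(load) - min(load)), load


def best_plan(orders, weeks, w_late=10, w_balance=1):
    """Exhaustively try every production week for every order (toy sizes only)."""
    choices = [range(earliest, weeks) for _, _, earliest, _ in orders]
    best = None
    for assignment in product(*choices):
        cost, load = plan_cost(assignment, orders, weeks, w_late, w_balance)
        if best is None or cost < best[0]:
            best = (cost, assignment, load)
    return best


# Hypothetical orders: (name, labor hours, earliest week, due week)
orders = [("A", 30, 0, 0), ("B", 30, 0, 2), ("C", 30, 1, 2)]
cost, assignment, load = best_plan(orders, weeks=3)
print(cost, assignment, load)
```

The search spreads the three orders over three weeks so every week carries 30 labor hours and nothing is late; building everything as early as possible would instead pile 60 hours into week 0.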

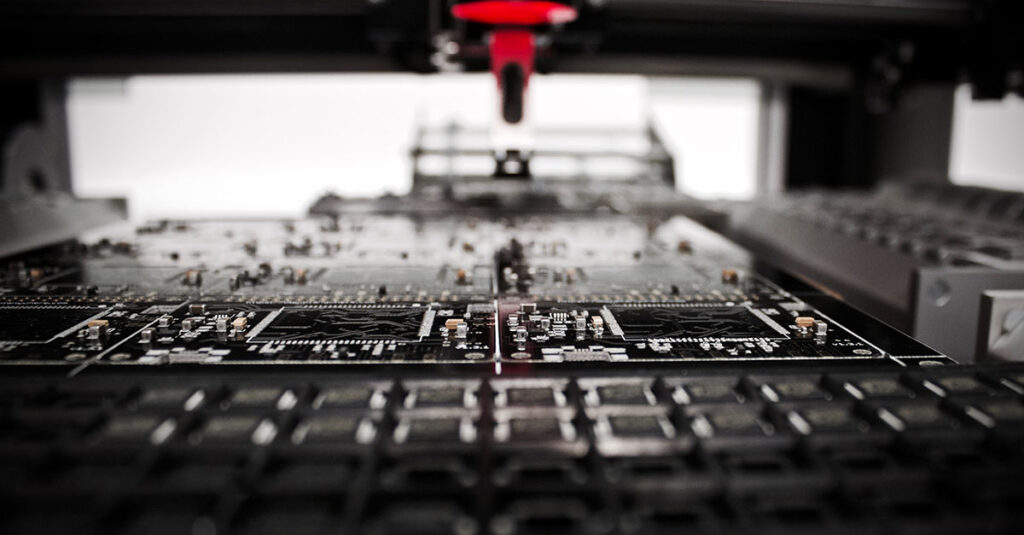

Case Study 2 – Holistic Planning & Scheduling for PCB Manufacturer

Production planning and scheduling have always been critical to this producer of printed circuit boards (PCBs). Its processes are very complex, which is why it employs about 100 skilled planners.

In order to break the problem up into manageable chunks, planners are responsible for specific portions of the planning and scheduling process, such as a specific workstation or set of machines. This planning work is mostly performed in Excel.

The company wants to take a more holistic approach to optimizing its plans and daily schedules. This means simultaneously considering sequencing rules, material availability, constraints like setup costs, and total throughput and order fulfillment objectives. Planners also need to be able to adjust schedules quickly without causing negative consequences downstream, which is particularly challenging given how interconnected the production flow is.

Their plant has between 20 and 60 work centers, the most complex of which relies on over 100 machines. A single PCB can have up to 4 layers and up to 102 different production steps within re-entrant processes that may visit the same work center several times.

One bottleneck analysis identified 27 work centers with both accumulation and wait time bottlenecks. For instance, one work center includes five surface finishing machines, each dedicated to a single type of metal (gold, silver, tin, etc.). To avoid creating a bottleneck at this work center, upstream work centers must feed the right mix of metals so that one machine doesn’t become overloaded while another sits under-utilized.
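The feeding problem at this finishing work center can be illustrated with a toy simulation. It is a hypothetical sketch, not the client’s system: three metals instead of five, one dedicated machine per metal that finishes one order per period, and two made-up release policies. The `fifo` policy releases orders in backlog order; the `balance` policy releases the metal whose machine currently has the shortest queue.

```python
from collections import Counter


def simulate(backlog, metals, periods, releases_per_period, policy):
    """Return (orders finished, idle machine-periods) at the finishing work center."""
    pending = list(backlog)   # orders not yet released, in FIFO order
    queue = Counter()         # orders waiting at each metal's dedicated machine
    completed = idle = 0
    for _ in range(periods):
        for _ in range(min(releases_per_period, len(pending))):
            if policy == "fifo":
                metal = pending[0]
            else:  # "balance": shortest queue among metals that still have pending orders
                candidates = [m for m in metals if m in pending]
                metal = min(candidates, key=lambda m: (queue[m], metals.index(m)))
            pending.remove(metal)  # drop the first pending order of that metal
            queue[metal] += 1
        for m in metals:           # each dedicated machine finishes one order per period
            if queue[m] > 0:
                queue[m] -= 1
                completed += 1
            else:
                idle += 1
    return completed, idle


metals = ["gold", "silver", "tin"]
backlog = ["gold"] * 6 + ["silver"] * 3 + ["tin"] * 3  # hypothetical backlog
print(simulate(backlog, metals, 4, 3, "fifo"))
print(simulate(backlog, metals, 4, 3, "balance"))
```

With the same backlog and release rate, FIFO floods the gold machine while the silver and tin machines sit idle (7 orders finished, 5 idle machine-periods), whereas the balancing policy keeps every machine supplied (10 finished, 2 idle).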

Adding to the scheduling complexity is determining the optimal order sequence. Put another way, how can planners manage the tradeoff between material changeover costs and order fulfillment? While it may be cheaper and faster to run a large batch of product X followed by a large batch of product Y (X,X,X,Y,Y,Y), it may sometimes be better to interleave the orders, say X,X,Y,X,Y,Y, in order to meet customer promise dates. Where the optimal balance lies is hard to determine and changes almost constantly.
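The tradeoff can be made concrete with a weighted cost: a penalty per changeover plus a penalty per unit of tardiness. In this hypothetical sketch each order takes one time unit, each changeover adds one time unit of setup, the due dates and weights are made up, and brute-force enumeration of every ordering replaces a real optimization engine, so it only works for a handful of orders.

```python
from itertools import permutations


def sequence_cost(seq, changeover_cost, tardiness_weight, setup_time=1, run_time=1):
    """Weighted cost of running orders (order_id, product, due) in the given sequence."""
    clock = changeovers = tardiness = 0
    previous = None
    for _, product, due in seq:
        if previous is not None and product != previous:
            clock += setup_time  # a changeover also consumes machine time
            changeovers += 1
        clock += run_time
        tardiness += max(0, clock - due)
        previous = product
    return changeover_cost * changeovers + tardiness_weight * tardiness


def best_sequence(orders, changeover_cost, tardiness_weight):
    """Try every ordering (toy sizes only) and return the cheapest one."""
    return min(permutations(orders),
               key=lambda s: sequence_cost(s, changeover_cost, tardiness_weight))


# Hypothetical orders: (order_id, product, due time)
orders = [("X1", "X", 1), ("X2", "X", 2), ("X3", "X", 10),
          ("Y1", "Y", 4), ("Y2", "Y", 10), ("Y3", "Y", 10)]
batched = orders                                        # X,X,X,Y,Y,Y
interleaved = [orders[i] for i in (0, 1, 3, 2, 4, 5)]   # X,X,Y,X,Y,Y
for cost in (2, 8):
    print(cost, sequence_cost(batched, cost, 10), sequence_cost(interleaved, cost, 10))
best = best_sequence(orders, changeover_cost=2, tardiness_weight=10)
print("".join(product for _, product, _ in best))
```

With a cheap changeover (cost 2) the interleaved sequence beats the batched one (6 vs. 12); with an expensive changeover (cost 8) the batched sequence wins (18 vs. 24). The exhaustive search also finds a third option, X,X,Y,Y,Y,X, that costs only 4 at a changeover cost of 2, which shows how hard the balance point is to find by hand.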

This client has been working with DecisionBrain to implement an optimization-based system that tackles the problem in two integrated steps. The first is a mid-term (monthly and weekly/daily) planning step that creates the high-level plan at the work center level based on actual and forecast orders. This plan is the starting point that feeds the operational scheduling system in phase 2.

Planning

The short- and mid-term planning system was implemented using the CPLEX optimization engine and its Mixed Integer Programming (MIP) algorithms. Because of the sheer size of the problem and the law of diminishing returns, developers tuned the model to produce a very good (well-optimized) plan while considering factors at a reasonable level of granularity. This mathematical model has a holistic view of all work centers, part numbers and customer demands. It can control bottlenecks globally, for example by making sure that the theoretical bottleneck is always fully utilized and has the WIP buffer it needs to absorb process variability. At the same time, it optimizes operational efficiency (by minimizing changeover times) and customer delivery dates.
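The article does not publish the model, but a heavily simplified bucketed planning MIP of this kind might look like the following. Every symbol is an illustrative assumption: p indexes products, w work centers and t periods; x_pwt is the quantity of p processed at w in period t; y_pwt is a binary setup indicator with changeover cost f_pw and changeover time σ_pw; a_pw is the unit processing time and C_wt the available capacity; d_pt is demand and u_pt the backlog with penalty h_p; ω is the last work center, b the theoretical bottleneck, β the work center feeding it, s_t the WIP buffer in front of b with minimum size S^min, and M a large constant. Changeovers are approximated by one setup per product, work center and period, and flow balance at the other work centers, plus the initial values u_p0 and s_0, are omitted.

```latex
\begin{aligned}
\min\;& \sum_{p,w,t} f_{pw}\, y_{pwt} \;+\; \sum_{p,t} h_{p}\, u_{pt} \\
\text{s.t.}\;& \sum_{p} \bigl(a_{pw}\, x_{pwt} + \sigma_{pw}\, y_{pwt}\bigr) \le C_{wt} && \forall\, w, t \\
& x_{pwt} \le M\, y_{pwt} && \forall\, p, w, t \\
& u_{pt} = u_{p,t-1} + d_{pt} - x_{p\omega t} && \forall\, p, t \\
& s_{t} = s_{t-1} + \sum_{p} x_{p\beta t} - \sum_{p} x_{pbt} && \forall\, t \\
& s_{t} \ge S^{\min} && \forall\, t \\
& x_{pwt},\ u_{pt},\ s_{t} \ge 0, \quad y_{pwt} \in \{0, 1\}
\end{aligned}
```

In this sketch the buffer constraint keeps the bottleneck from starving, and the setup time in each capacity row ties changeovers to lost production, so the solver trades changeover cost against backlog on customer orders.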

Scheduling

The daily scheduling system takes in work orders from the planning system and then uses an optimization engine whose algorithms are better suited to operational scheduling problems: constraint programming (CP). CP algorithms are good at solving sudoku-like puzzles with many constraints, such as product-machine compatibility rules, setup times and min-max delay times. As with all optimization engines, no matter the algorithm, goals or objectives also have to be factored in, such as minimizing cost and meeting due dates.
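A real CP engine combines constraint propagation with search over interval variables. The toy below is only a plain depth-first branch-and-bound search in Python, not a CP solver, but it shows the same ingredients on a hypothetical four-job, two-machine instance: machine compatibility prunes the choices, a changeover between product families adds setup time, and the objective is total tardiness against due dates. The job data, `setup_time` and the function name `schedule` are illustrative.

```python
def schedule(jobs, machines, setup_time):
    """Depth-first branch-and-bound minimizing total tardiness (toy sizes only).

    jobs maps job_id -> (family, duration, due, allowed_machines).
    """
    best = {"tardiness": float("inf"), "plan": None}

    def search(remaining, state, plan, tardiness):
        if tardiness >= best["tardiness"]:
            return  # bound: tardiness can only grow as more jobs are added
        if not remaining:
            best["tardiness"], best["plan"] = tardiness, list(plan)
            return
        for job in sorted(remaining):
            family, duration, due, allowed = jobs[job]
            for m in machines:
                if m not in allowed:
                    continue  # product-machine compatibility constraint
                free_at, last_family = state[m]
                needs_setup = last_family is not None and last_family != family
                start = free_at + (setup_time if needs_setup else 0)
                end = start + duration
                state[m] = (end, family)  # append the job to machine m
                plan.append((job, m, start, end))
                search(remaining - {job}, state, plan, tardiness + max(0, end - due))
                plan.pop()                # undo before trying the next option
                state[m] = (free_at, last_family)

    search(frozenset(jobs), {m: (0, None) for m in machines}, [], 0)
    return best["tardiness"], best["plan"]


# Hypothetical jobs: (family, duration, due, allowed machines)
jobs = {
    "J1": ("gold", 3, 3, {"M1"}),
    "J2": ("tin", 2, 4, {"M1", "M2"}),
    "J3": ("gold", 2, 6, {"M1", "M2"}),
    "J4": ("tin", 3, 5, {"M2"}),
}
total_tardiness, plan = schedule(jobs, ["M1", "M2"], setup_time=2)
print(total_tardiness)
for job, machine, start, end in sorted(plan):
    print(job, machine, start, end)
```

The search finds a schedule with no late jobs by keeping each machine on one metal family (gold on M1, tin on M2), which avoids any changeover.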

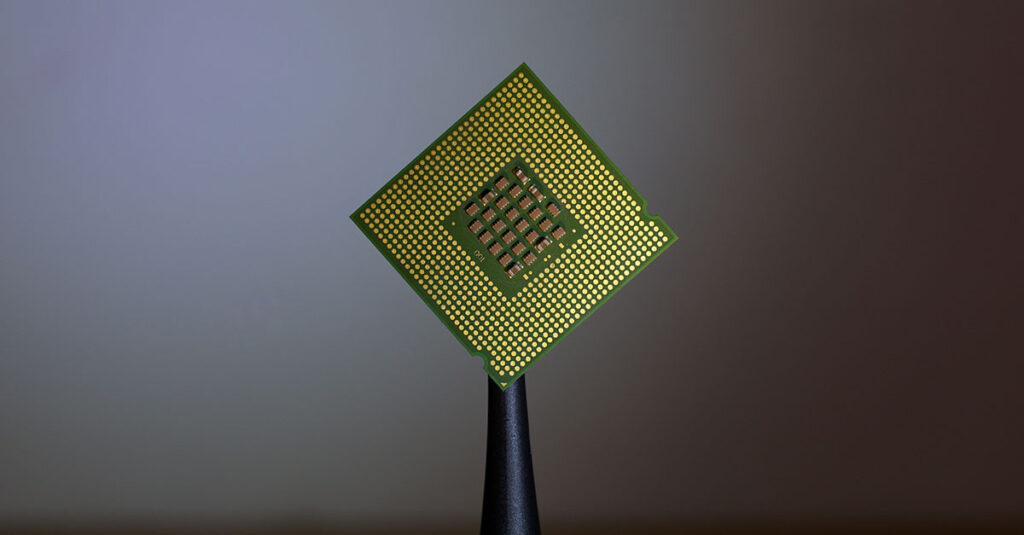

Case Study 3 – Capacity Planning System for Chip Manufacturer

This client is one of the world’s largest chipmakers. It was limited by a spreadsheet-based system with an embedded optimization solver. The problem was that the system was not flexible enough to handle new requirements, like the ability to fulfill partial orders. Over time, this very large spreadsheet became more complex and fragile, and therefore difficult and risky to maintain.

Some of the objectives of the long-term planning system enhancement were:

- support managers in defining the best routing for each product

- increase overall production efficiency/throughput

- better identify bottlenecks so that corrective action can be taken and expenditures on additional resources can be allocated optimally

- support partial order fulfillment
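Why partial fulfillment changes the plan can be seen with a simple priority-based allocation of limited capacity. This is a hypothetical greedy sketch, not the client’s optimization model: orders are served in priority order, and the only difference between the two runs is whether an order may be shipped partially. The function name `allocate` and the order data are illustrative.

```python
def allocate(orders, capacity, allow_partial):
    """Allocate capacity to (order_id, quantity) pairs in priority order."""
    shipped = {}
    remaining = capacity
    for order_id, quantity in orders:
        if quantity <= remaining:
            shipped[order_id] = quantity   # order fits in full
        elif allow_partial:
            shipped[order_id] = remaining  # ship whatever is left (possibly zero)
        else:
            shipped[order_id] = 0          # all-or-nothing: skip the order
        remaining -= shipped[order_id]
    return shipped


orders = [("A", 60), ("B", 50), ("C", 30)]  # hypothetical, highest priority first
for partial in (False, True):
    shipped = allocate(orders, capacity=100, allow_partial=partial)
    print(partial, shipped, sum(shipped.values()))
```

All-or-nothing skips the higher-priority order B entirely and leaves 10 units of capacity unused (90 shipped); allowing partial fulfillment ships 40 units of B and uses all 100 units.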

DecisionBrain implemented the new planning system using IBM CPLEX and IBM Decision Optimization Center (DOC). Among the factors that were considered by the new system were:

- Customer demand

- RTT (Run to Target), also known as centerlining, is a lean approach to reducing process variability while increasing machine efficiency.

- OEE (Overall Equipment Effectiveness), i.e., (% Uptime) x (% Speed) x (% Good-quality products)

- Yield: % working chips

- Number of available resources

- Resource capacities
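The OEE formula in the list, and one way it could link to resource capacities and the number of resources, can be sketched as follows. The figures are hypothetical, and sizing machines from OEE-adjusted capacity is a simple illustration of how these factors might feed a capacity plan, not necessarily how the deployed system combines them. Yield is kept as a separate conversion from wafers to working chips.

```python
import math


def oee(uptime, speed, quality):
    """OEE = uptime x speed x good-quality share, each a fraction in [0, 1]."""
    for value in (uptime, speed, quality):
        if not 0.0 <= value <= 1.0:
            raise ValueError("OEE components must be fractions between 0 and 1")
    return uptime * speed * quality


def effective_capacity(nominal_units_per_week, uptime, speed, quality):
    """Good units a resource can actually deliver per week."""
    return nominal_units_per_week * oee(uptime, speed, quality)


def machines_needed(weekly_demand, nominal_units_per_week, uptime, speed, quality):
    """Round up: a fraction of a machine still requires a whole machine."""
    return math.ceil(weekly_demand / effective_capacity(nominal_units_per_week, uptime, speed, quality))


def good_chips(wafers, dies_per_wafer, yield_rate):
    """Yield converts wafer output into working chips."""
    return wafers * dies_per_wafer * yield_rate


print(oee(0.90, 0.95, 0.98))                          # about 0.838
print(machines_needed(3000, 1000, 0.90, 0.95, 0.98))  # 3000 / ~838 rounds up to 4
print(good_chips(800, 500, 0.90))
```

A nominal capacity of 1,000 units per week shrinks to roughly 838 once uptime, speed and quality losses are applied, so meeting 3,000 units of weekly demand needs four machines rather than three.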

By exposing so many additional optimization “levers,” planners and analysts could easily test the impact of various model changes. This is thanks not only to the built-in what-if scenario analysis of the DecisionBrain platform but also to an integrated rule engine. The rule engine lets users try, say, different potential resource capacities: its rules fire and generate additional data sets that feed the optimization solver. The “goodness” of each resulting schedule is then evaluated against a variety of KPIs.
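The rule-driven what-if loop can be sketched in a few lines. Everything here is hypothetical: a single rule (`add_capacity_rule`) proposes extra capacity for any resource loaded above a threshold, and a deliberately crude evaluator (`throughput`) stands in for the optimization solver by taking output as the smaller of demand and the tightest resource capacity on a serial line.

```python
def throughput(capacities, demand):
    """Crude stand-in for the solver: output is capped by demand and the tightest resource."""
    return min(demand, min(capacities.values()))


def add_capacity_rule(capacities, demand, threshold=0.95, increases_pct=(10, 20)):
    """Hypothetical rule: for each heavily loaded resource, emit scenarios with more capacity."""
    base = throughput(capacities, demand)
    scenarios = []
    for resource, capacity in capacities.items():
        if base / capacity >= threshold:  # the rule fires for this resource
            for pct in increases_pct:
                variant = dict(capacities)
                variant[resource] = capacity * (100 + pct) / 100
                scenarios.append((f"{resource}+{pct}%", variant))
    return scenarios


capacities = {"lithography": 100, "etch": 120, "test": 150}  # hypothetical units per week
demand = 130
for name, variant in add_capacity_rule(capacities, demand):
    print(name, throughput(variant, demand))
```

Only lithography triggers the rule. The +20% scenario lifts throughput to 120 rather than further because etch, at 120 units per week, becomes the new bottleneck, exactly the kind of diminishing return a KPI comparison across scenarios should surface.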

About Optimization-Based Tools and Systems

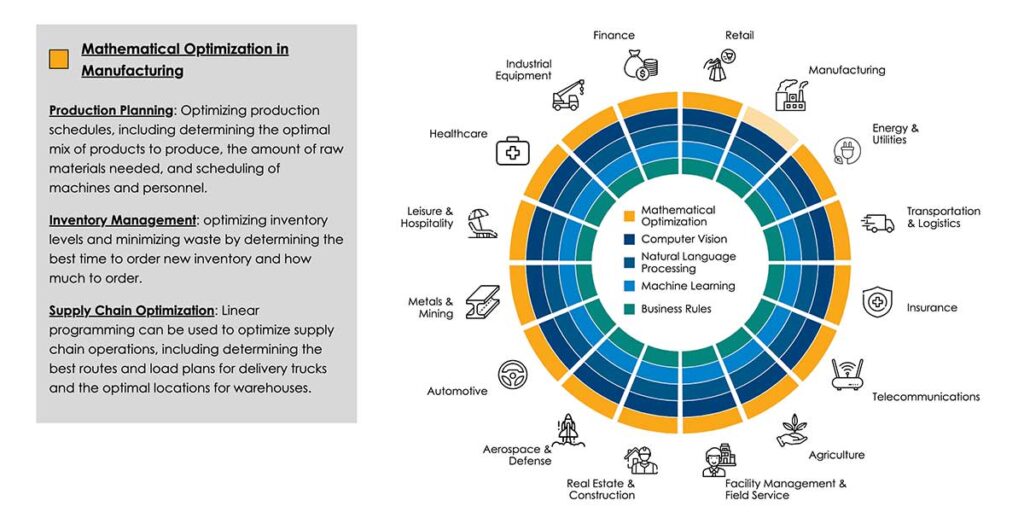

Optimization-based tools that rely on algorithms like those discussed here, such as linear programming (LP) and constraint programming (CP), have been used for decades across industries to solve the most advanced planning, scheduling, routing, logistics and other optimization problems. They are used to determine airline seat pricing, schedule professional sports games, arrange products on store shelves, select locations for stores and warehouses, group and route parcel deliveries and determine the best marketing offer to present to a consumer.

The best-known such engine, IBM CPLEX, uses advanced mathematical algorithms such as mixed integer linear programming. Developing such a system always used to require highly skilled operations researchers, or “OR” programmers. These OR experts would work with the business to divide business problems into parts and then translate each part into a set of hard constraints, soft constraints (or wishes), and objectives (what to maximize or minimize). These inputs would then be fed into CPLEX, running on one or more servers. Given some data, the optimization engine would chug away for a few seconds or minutes and ultimately suggest the optimal plan, schedule, route or task sequence. Data engineers and application developers would also be involved to integrate the data sources, create the interfaces and deploy the application.

Many application vendors saved organizations the hassle of building such systems by developing pre-built use-case-specific applications for common functions like production planning, workforce scheduling, route planning, price optimization, network design, and the list goes on. These applications have a lower price point but quickly run into functional limitations when organizations’ requirements change.

Organizations in specialized industries with few players and unique requirements, such as semiconductors, port operations, airlines, professional sports leagues, cruise lines and utilities, among many others, still tend to use custom-built solutions, many of which are built on top of IBM CPLEX.

Nowadays, it takes a fraction of the time to develop these custom applications. Here at DecisionBrain, we average about 90 person-days per custom development project. This is thanks to new tools that offer a modular, hybrid approach of pre-built modules without sacrificing flexibility and future adaptability.

The Hybrid Solutions: IBM DOC and DecisionBrain DB Gene

With decades of collective optimization experience, the team at DecisionBrain has created a development platform, called DB Gene, for quickly creating high-ROI analytics and optimization solutions with a low TCO. DB Gene’s modular approach brings pre-built functionality, such as:

- Advanced web User Interface (UI)

- What-if scenario analysis capabilities

- Integrated user management

- Parallel processing and execution monitoring

- Containerized deployment

- Integrated security features


References:

From Sand to Silicon “Making of a Chip”: https://download.intel.com/newsroom/kits/chipmaking/pdfs/Sand-to-Silicon_32nm-Version.pdf

IBM Canada Business Profile: https://www.c2mi.ca/en/partenaire/ibm/

About the Author

Filippo Focacci, PhD, is co-founder and CEO of DecisionBrain. Before founding DecisionBrain, Filippo worked for ILOG and IBM for over 15 years, where he held several leadership positions in consulting, R&D, product management and product marketing in the area of supply chain and optimization. He received a Ph.D. in operations research from the University of Ferrara (Italy) and has over 15 years of experience applying O.R. techniques in industrial applications in several optimization domains.

About the Author

Issam Mazhoud has 10 years of experience in optimization, with a specialization in manufacturing and supply chain planning and scheduling. He works at DecisionBrain as an Analytics & Optimization Specialist and has a Ph.D. in Industrial Engineering and Optimization from the Grenoble Institute of Technology.